Hadoop Foundation III: Laying the architecture & tools that complement Hadoop

You can also access Part I & Part II of this series, “Laying the foundation of a data-driven enterprise with Hadoop”.

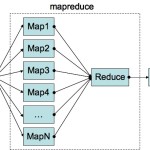

With Apache Hadoop at its core, the Hadoop platform has performed well in batch, interactive, and real-time data processing.

Recently, Hortonworks launched a new technology called Apache NiFi. It was created at the U.S. National Security Agency (NSA), where it was called NiagaraFiles. It was open-sourced at the end of 2014 and is a top-level Apache project today. NiFi manages data close to its inception and throughout its flow as it travels to the system it needs to reach, whether it is stored on a Hadoop platform or has real-time analysis applied to it while it is flowing. So, you get a very good combination of deep historical insights from a full-fidelity history and the ability to draw perishable insights from data that is arriving right now. That feedback loop is important as people lay the foundation of a data-driven enterprise.


What about data security?

Finally, securing your data is important no matter which access engine you use. You might use Hive to access data through SQL, Spark for data discovery, machine learning (ML), and modeling, or Kafka, Storm, and Spark for real-time stream processing. However you interact with the data, you want to set up a centralized security policy that lets you administer policies, audit access, decide who gets access, and encrypt data both in motion and at rest.
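To make the idea of one centralized policy concrete, here is a minimal sketch in Python. It is not how any Hadoop component implements security; the `Policy` and `PolicyStore` classes, the `sales` resource, and the user names are all hypothetical and exist only for illustration. The point it shows is that the access decision depends on the user and the resource, while the engine (Hive, Spark, Storm) is recorded for auditing but never changes the outcome.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """One rule in a hypothetical central policy store."""
    resource: str             # e.g. a table name
    allowed_users: frozenset  # users who may read the resource


@dataclass
class PolicyStore:
    """Single place where policies are defined and every decision is audited."""
    policies: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def add(self, policy: Policy) -> None:
        self.policies[policy.resource] = policy

    def is_allowed(self, user: str, resource: str, engine: str) -> bool:
        policy = self.policies.get(resource)
        allowed = policy is not None and user in policy.allowed_users
        # The engine is recorded for auditing but never changes the decision.
        self.audit_log.append((engine, user, resource, allowed))
        return allowed


store = PolicyStore()
store.add(Policy("sales", frozenset({"alice"})))

# The same user and resource get the same answer from every engine.
decisions = {
    engine: store.is_allowed("alice", "sales", engine)
    for engine in ("hive", "spark", "storm")
}
```

Because every engine consults the same store, adding a new processing engine later does not require a new set of rules, and the audit log stays in one place.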

There are newer technologies in the platform as well. For operations, there is Apache Ambari. For governance, there are technologies like Apache Atlas and Apache Falcon.

Parting thoughts

So, a platform with a YARN-based architecture brings several benefits. First, you can bring diverse workloads under your management and add new data processing engines on top, so the platform is easy to expand and future-proof. Second, you can apply consistent services for governance, operations, and security across your data, which gives you a consistent, centralized operation as you bring in new workloads and data. Third, you gain resource efficiency when you run a mixed set of workloads, whether end users are issuing SQL through their tools or using Spark to discover new patterns or values. Tools from vendors like SAS and Microsoft can run natively in Hadoop, and the platform ensures that they do not monopolize the resources of other workloads, so it remains an integrated operation.

So, a modern architecture with Hadoop as its underpinning should behave very much like traditional data systems, with the security, operations, and governance that you expect. That lets you interoperate and integrate it with other tools for a broad range of use cases.

But make sure that the experience is integrated and efficient. Whatever environment you work in, ensure that it has strong integration capability.

So, a modern architecture needs Hadoop together with a modern set of tools that allow you to consolidate a wide range of data sets. You need to deliver real-time and historical insights in an integrated way, and build a closed-loop analytics system that integrates with the broader set of tools in your data center.

This article is based on the Hortonworks webinar titled “Laying the foundation for a Data-Driven Enterprise with Hadoop”. It can be accessed here.