What Are Effective Ways to Handle Privacy & Cybersecurity Risks in an AI Business World?

The question may seem basic, but it addresses one of the most significant challenges facing companies worldwide. It matters because, despite decades of effort to strengthen digital systems, cybersecurity threats remain alarmingly prevalent.

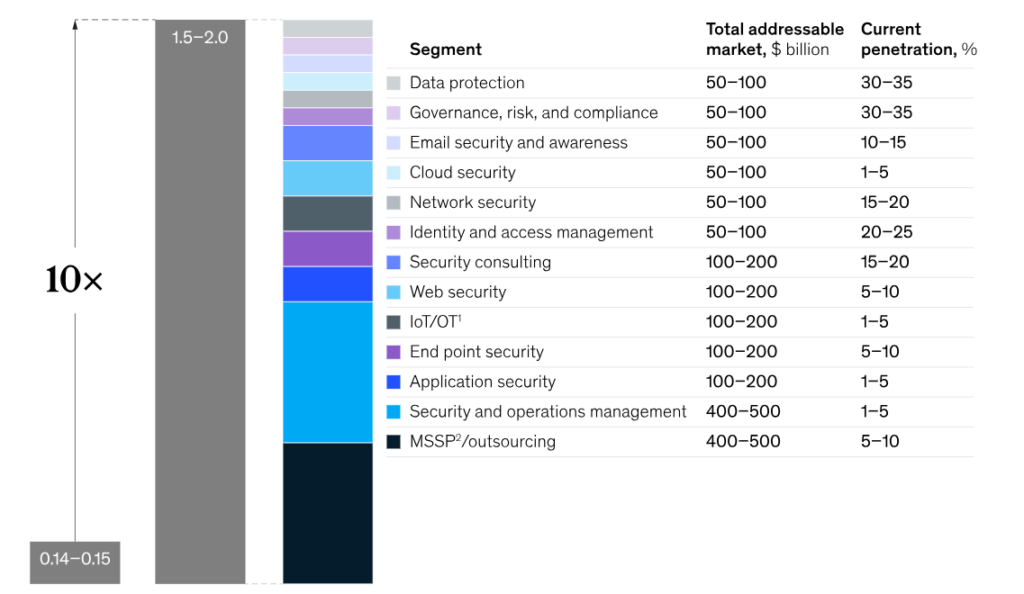

In 2022, a staggering 4,100 publicly disclosed data breaches exposed around 22 billion records. What's surprising is that this happened even though companies worldwide spent a record $150 billion on cybersecurity in 2021.

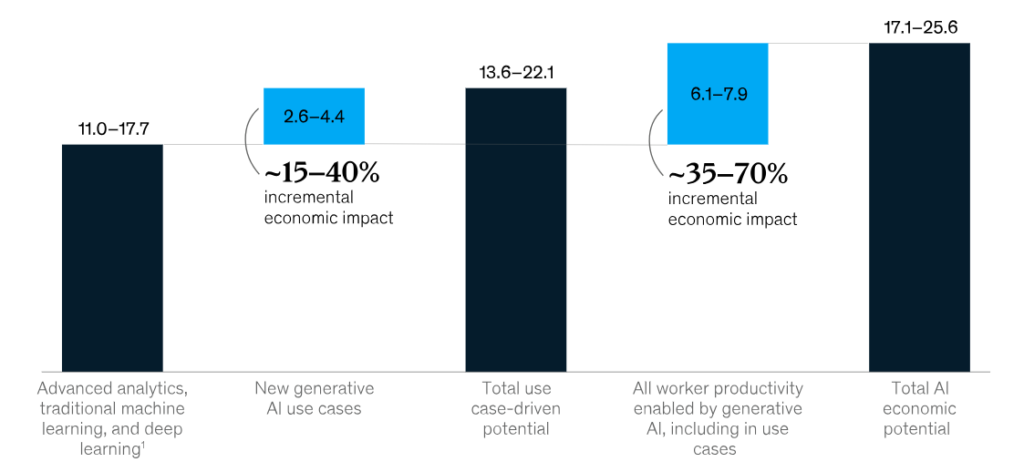

Since ChatGPT launched in late 2022, a flood of articles, white papers, and podcasts has examined the spread of AI tools and what they can do. Adoption keeps growing: about 35% of companies already use AI, and another 42% are exploring how they might use it. What makes generative AI especially notable is its potential impact on the global economy, its room for growth, and the way it is changing how businesses work.

A recent McKinsey study examined 63 generative AI use cases and estimated that they could add $2.6 trillion to $4.4 trillion to the global economy each year.

Balancing Risk & Reward

However, as we consider applying this technology across functions, from operations to software engineering, it is crucial for us, as C-suite leaders and corporate directors, to weigh the risks of adopting these new capabilities against their rewards. Industry experts stress the importance of maintaining this balance, especially in areas like consumer privacy and cybersecurity.

C-suite leaders should strongly advocate for making consumer data privacy and governance standards a top priority when integrating new AI tools and applications into business strategies. Because AI models are trained on vast amounts of data, businesses must closely monitor the risk of exposing personally identifiable information (PII).

As these models become more dynamic, they may unintentionally collect PII through user interactions or connections to other systems. Whether PII is included directly or inferred indirectly, malicious actors could compromise it through breaches, hacks, theft, and phishing: essentially the challenges we face today, evolved and magnified.
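One practical way to monitor PII exposure is to scan text before it is used for training or sent to an external AI tool. The sketch below assumes a simple regex-based screen; the pattern names, the patterns themselves, and the functions `find_pii`, `redact_pii`, and `scan_records` are hypothetical illustrations, not a complete PII detector. Production systems typically need much broader coverage (names, addresses, national IDs) and a dedicated detection tool.

```python
import re

# Hypothetical, deliberately narrow patterns for illustration only.
# Real PII detection needs far broader coverage than three regexes.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return the matches for each PII pattern found in text, keyed by pattern name."""
    hits = {}
    for name, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits


def redact_pii(text: str) -> str:
    """Replace every PII match with a placeholder such as [EMAIL]."""
    for name, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{name.upper()}]", text)
    return text


def scan_records(records: list[str]) -> list[int]:
    """Return the indices of records that contain any suspected PII."""
    return [i for i, record in enumerate(records) if find_pii(record)]


if __name__ == "__main__":
    sample = [
        "Customer asked about delivery times.",
        "Contact jane.doe@example.com or 555-123-4567.",
        "SSN on file: 123-45-6789",
    ]
    print(scan_records(sample))     # indices of records to review
    print(redact_pii(sample[1]))    # redacted copy safe to pass along
```

In this sketch, flagged records would be reviewed or redacted before they reach a model; the design choice is to screen data at the boundary rather than rely on the model to ignore sensitive fields.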

Mitigating Privacy Risks

To mitigate these privacy risks effectively, it's essential to set up an AI steering committee with key stakeholders from domains such as business, data science, analytics, information security, and information technology. This committee plays a pivotal role in creating and assessing practices, documentation, and policies for crucial areas such as model transparency, third-party data access, and guidelines for data storage and deletion.

Framework Based On Risk Appetite

Developing a structured approach to evaluate how AI tools are integrated into your business operations, and to pinpoint privacy vulnerabilities, is just as crucial as recognizing the growth and profitability that AI can bring. Experts suggest establishing a baseline risk assessment and encouraging leadership to identify and rank risks according to the organization's risk tolerance, weighed against the potential rewards.
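A risk ranking like this is often done with a simple risk register. The sketch below assumes a common likelihood × impact scoring on 1 to 5 scales and a single numeric risk-appetite threshold; the `AIRisk` class, `rank_risks` function, scales, threshold, and example risks are all hypothetical illustrations, not a prescribed framework.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    reward: int      # assumed scale: 1 (low) to 5 (high) expected business benefit

    @property
    def score(self) -> int:
        """Inherent risk score: likelihood x impact, ranging from 1 to 25."""
        return self.likelihood * self.impact


def rank_risks(risks: list[AIRisk], appetite: int) -> list[tuple[str, int, str]]:
    """Rank risks from highest to lowest score and label each against the appetite.

    Risks scoring above `appetite` are labeled "mitigate"; the rest "accept".
    Risks with equal scores are ordered by higher expected reward first.
    """
    ordered = sorted(risks, key=lambda r: (-r.score, -r.reward))
    return [
        (r.name, r.score, "mitigate" if r.score > appetite else "accept")
        for r in ordered
    ]


if __name__ == "__main__":
    # Hypothetical register entries, loosely based on risks discussed in this article.
    register = [
        AIRisk("PII exposure through model training", likelihood=3, impact=5, reward=4),
        AIRisk("Third-party data access by AI vendor", likelihood=2, impact=4, reward=5),
        AIRisk("Model manipulation via compromised account", likelihood=4, impact=3, reward=3),
    ]
    for name, score, action in rank_risks(register, appetite=10):
        print(f"{score:>2}  {action:<8} {name}")
```

Lowering or raising `appetite` is how leadership would express a more cautious or more aggressive stance; the reward column only breaks ties here, though a real framework might weigh it more explicitly.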

Cybersecurity Practices & Protocols

When evaluating new AI tools and platforms, it's equally crucial to consider cybersecurity practices and protocols. Because AI models process vast amounts of data, they attract malicious actors looking for security weaknesses they can exploit to steal confidential information or deploy malware.


Much as in an account takeover (ATO), where hackers gain illicit access to online accounts, bad actors may try to infiltrate trained AI models and manipulate the system to carry out unauthorized transactions or reach personal data. These are just two examples. As AI solutions grow more complex, vulnerabilities across models, training environments, production settings, and data sets will inevitably multiply.

Key Takeaways

Crafting an AI strategy and execution plan demands careful evaluation, including measuring the potential business benefits and the associated risks. To address privacy and cybersecurity concerns effectively, top management can take a handful of essential actions, and board directors can ask management for clarity on each of them. These measures play a vital role in positioning your organization for success.

1. Baseline Risk Management Assessment

A good first step is to evaluate new AI tools and capabilities through the lens of privacy and cybersecurity. This assessment aims to identify gaps, specific vulnerabilities, or potential oversights in management frameworks. Its findings can shed light on the organization's risk management readiness, its risk appetite, and the appropriate pace for integrating AI.

2. Build The Forums For Discussion

It also helps to promote alignment and awareness within your corporate culture about the potential and the challenges of new AI initiatives and capabilities. Suitable forums, such as working groups and steering committees, can connect different functions and business units, enabling them to develop comprehensive processes and policies.